In recent years, large language models have achieved remarkable results on various natural language tasks. However, building systems that can seamlessly interact and cooperate with humans or with each other remains an unsolved challenge. Interactive user-facing products, such as chatbots and voice assistants, tend to make obvious mistakes and as a result lead to a frustrating customer experience. This might be because these networks “learn the language” (figuratively speaking) differently than humans do. Such models are passively trained on huge text corpora, while children learn much more interactively. At the very beginning, they listen to the language adults use. Later on, they start to produce very basic forms of communication, with a handful of single words. Only over time do they come up with longer sentences and correct grammar. Most importantly, they continuously receive real-time feedback, and their mistakes are corrected by others. What if we teach neural networks to communicate the same way we teach people?

Language emergence between agents

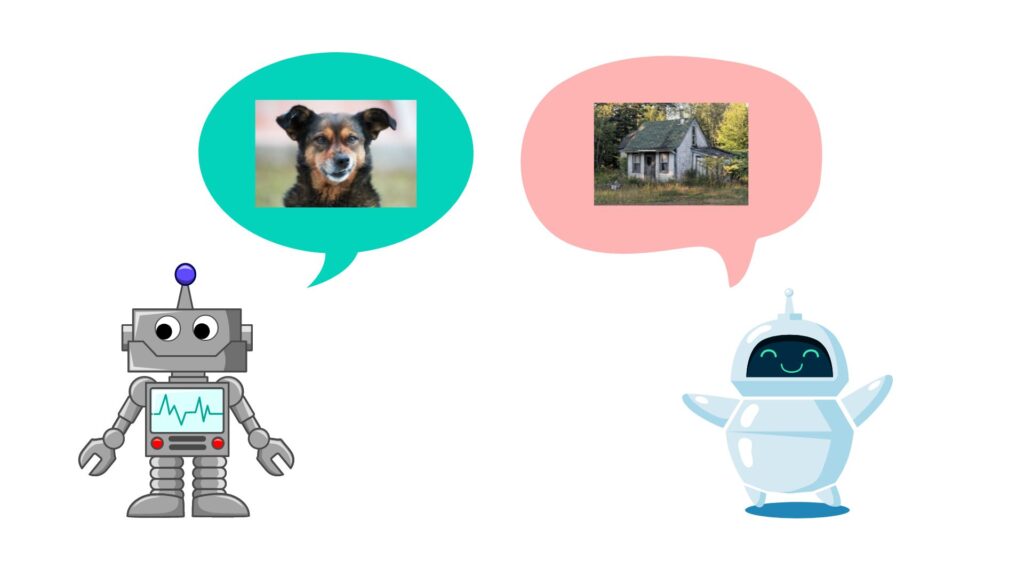

Developing cooperation in the real world via natural language is extremely difficult. Therefore, researchers initially started with simplified environments that require communication between multiple agents to achieve a common goal. We use an environment with two agents: the Sender and the Receiver. The Sender observes a randomly sampled image and sends the Receiver a message that aims to describe the image. Afterwards, the Receiver is presented with multiple images and has to select the image seen by the Sender, based solely on the message passed between the agents. The incorrect images shown to the Receiver (those not shown to the Sender) are called distractors. The goal is for the Receiver to correctly select the image that was seen by the Sender. The only way to achieve that is to develop a common language that allows the two agents to communicate. This environment is a variation of the Lewis signaling game.

In practice, both agents are implemented as neural networks and a reinforcement learning objective is employed, where a reward of 1 corresponds to successful communication (the Receiver selects the correct image) and a reward of 0 to communication failure.
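The round structure and the binary reward can be sketched in a few lines. This is a minimal illustration, not the implementation used in the experiments: `play_round`, `reinforce_loss`, and the callable `sender` and `receiver` arguments are hypothetical names, and the REINFORCE-style surrogate loss is an assumption, since the text only says that a reinforcement learning objective is used.

```python
import random


def play_round(sender, receiver, target, distractors, rng):
    """Play one round of the signaling game and return the binary reward.

    `sender` maps an image to a message. `receiver` maps a message and a
    list of candidate images to the index of its guess. Both are
    hypothetical stand-ins for the two neural networks.
    """
    message = sender(target)
    candidates = list(distractors)
    # Place the target at a random position so its index carries no signal.
    target_index = rng.randrange(len(candidates) + 1)
    candidates.insert(target_index, target)
    guess = receiver(message, candidates)
    # Reward 1 for successful communication, 0 for failure.
    return 1.0 if guess == target_index else 0.0


def reinforce_loss(reward, log_prob, baseline=0.0):
    """REINFORCE-style surrogate loss (an assumed choice of RL objective).

    Minimizing it raises the log-probability of sampled actions that
    earned more reward than the baseline.
    """
    return -(reward - baseline) * log_prob
```

With a toy Sender that simply forwards the image and a Receiver that looks the message up among the candidates, every round earns a reward of 1; any Receiver that ignores the message will fail once the number of candidates grows.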

Communicating general concepts

Ideally, the message conveyed by the Sender describes high-level concepts of an image rather than small, pixel-level differences, as this leads to better generalization and robustness: insignificant perturbations in input images should lead to only small differences in the Receiver’s outputs. The Sender shouldn’t say: “The pixel in position (154, 986) is navy blue”, but rather “There is a boat with 4 people, sailing on the sea with a beach in the background.” Unfortunately, Bouchacourt and Baroni (2018) showed that the agents do in fact focus on tiny details, which decreases the robustness of the communication.

We propose two novel objectives (or pressures) that leverage image classification to incentivize communication about high-level features: Multi-Label Binary image classification (MLB) pressure and Multi-Class Target image classification (MCT) pressure. We denote the regular loss used in signaling games that maximizes communication success as $L_{\text{SG}}$.

When using MLB pressure, for each image (the target image and the distractors) the model additionally predicts whether it is of the same class as the target image, using a multi-label binary cross-entropy loss $L_{\text{MLB}}$. The total loss is:

$$L = L_{\text{SG}} + \lambda \, L_{\text{MLB}}$$

where $L_{\text{SG}}$ is the regular signaling game loss and $\lambda$ is a weight determining the extent to which the additional loss impacts the total loss.
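As a rough illustration of how the MLB term combines with the game loss, the sketch below computes a mean binary cross-entropy over the per-image "same class as the target?" predictions and adds it to the game loss with a weight. The function names, the averaging over images, and the clipping constant `eps` are assumptions made for this example.

```python
import math


def binary_cross_entropy(probs, labels, eps=1e-12):
    """Mean binary cross-entropy over per-image same-class predictions.

    `probs[i]` is the predicted probability that image i shares the
    target's class; `labels[i]` is 1 if it does and 0 otherwise.
    """
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


def total_loss_mlb(game_loss, probs, labels, weight):
    """Signaling game loss plus the weighted MLB classification loss."""
    return game_loss + weight * binary_cross_entropy(probs, labels)
```

Setting `weight` to 0 recovers the plain signaling game loss, which makes the baseline a special case of the same objective.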

For MCT pressure, the model additionally predicts the class of the target image using a cross-entropy loss $L_{\text{MCT}}$. The total loss is:

$$L = L_{\text{SG}} + \lambda \, L_{\text{MCT}}$$

where $L_{\text{SG}}$ is the regular signaling game loss and $\lambda$ is a weight determining the extent to which the additional loss impacts the total loss.
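The MCT term can be sketched in the same way. The example below assumes the model outputs one raw score (logit) per class for the target image and applies a softmax cross-entropy; `cross_entropy` and `total_loss_mct` are hypothetical names used only for illustration.

```python
import math


def cross_entropy(logits, target_class):
    """Softmax cross-entropy for a single example.

    Uses the log-sum-exp trick (subtracting the maximum logit) so that
    large logits do not overflow.
    """
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target_class]


def total_loss_mct(game_loss, logits, target_class, weight):
    """Signaling game loss plus the weighted MCT classification loss."""
    return game_loss + weight * cross_entropy(logits, target_class)
```

A model that assigns equal scores to all 100 classes incurs a loss of log(100), roughly 4.6, which corresponds to the chance-level accuracy of 0.01.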

Results

We use the CIFAR-100 dataset for our experiments. We vary the probability $p$ that a distractor image will be of the same class as the target image. This is necessary because the dataset contains 100 different classes. Always drawing the distractor image from a random class would therefore make the classification task too easy for the model, since predicting that the distractor image is of a different class than the target image would be correct most of the time.
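One way to implement this sampling scheme is sketched below. It assumes the dataset is available as a dictionary from class label to a list of images, and it draws the "other" class uniformly from the classes that differ from the target's; both choices, like the name `sample_distractor`, are assumptions for this example. For brevity it does not exclude the target image itself when sampling from the target's class.

```python
import random


def sample_distractor(images_by_class, target_class, same_class_prob, rng):
    """Return (image, class_label) for one distractor.

    With probability `same_class_prob` the distractor comes from the
    target's class; otherwise it comes from a different class chosen
    uniformly at random.
    """
    if rng.random() < same_class_prob:
        chosen_class = target_class
    else:
        other_classes = [c for c in images_by_class if c != target_class]
        chosen_class = rng.choice(other_classes)
    return rng.choice(images_by_class[chosen_class]), chosen_class
```

With `same_class_prob` set to 0 every distractor differs in class from the target, and with 1 every distractor shares it, which matches the two extreme settings in the experiments.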

The table below presents the signaling game and image classification accuracies for the baselines and both pressures. The standard signaling game accuracy is the fraction of rounds in which the Receiver correctly selected the target image. When this metric approaches 100%, we can conclude that the agents communicate successfully. Image classification accuracy shows how well the additional classification task is learned alongside the signaling game, so it should be as high as possible.

We observe that when the same-class probability is 0, the signaling game accuracy is comparable across all pressures. At a probability of 0.5, there is a small drop in signaling game accuracy of 0.032 for MLB and 0.037 for MCT compared to the baseline. At a probability of 1, performance falls by 0.01 for MLB and by 0.041 for MCT. Furthermore, MLB achieves high image classification accuracy across all three class probabilities. The image classification performance of MCT is not high, but it is far above random (random would be around 0.01, since there are 100 classes). The image classification accuracy for MLB is noticeably higher than for MCT. This is probably because MLB is a relatively simple binary classification task, whereas MCT is a 100-way multi-class classification problem.

| Pressure | Same-class probability | Signaling game accuracy | Image classification accuracy |
| --- | --- | --- | --- |
| baseline | 0.0 | 0.976 | – |
| baseline | 0.5 | 0.955 | – |
| baseline | 1.0 | 0.945 | – |
| MLB | 0.0 | 0.973 | 0.918 |
| MLB | 0.5 | 0.923 | 0.856 |
| MLB | 1.0 | 0.935 | 1.000 |
| MCT | 0.0 | 0.972 | 0.379 |
| MCT | 0.5 | 0.918 | 0.455 |
| MCT | 1.0 | 0.905 | 0.414 |

Topographic similarity is a measure based on the intuition that differences between representations of objects in the input image representation space should be similar to the differences between representations of the same objects in the message representation space (Lazaridou et al., 2018). High topographic similarity gives strong evidence that the additional tasks achieve the goal of making the communication more focused on high-level concepts in the images.
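A common way to turn this intuition into a number is to compute the Spearman rank correlation between pairwise distances in the input space and pairwise distances in the message space. The sketch below follows that recipe under stated assumptions: Euclidean distance between input feature vectors and Hamming distance between equal-length messages are illustrative choices, not necessarily the distance functions used in the experiments, and all function names are hypothetical. The score is undefined when all distances in one space are equal.

```python
from itertools import combinations


def ranks(values):
    """1-based ranks, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def pearson(xs, ys):
    """Pearson correlation; Spearman is Pearson applied to ranks."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def euclidean(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def hamming(a, b):
    """Number of positions at which two equal-length messages differ."""
    return sum(x != y for x, y in zip(a, b))


def topographic_similarity(inputs, messages):
    """Spearman correlation between input-space and message-space distances."""
    pairs = list(combinations(range(len(inputs)), 2))
    input_dists = [euclidean(inputs[i], inputs[j]) for i, j in pairs]
    message_dists = [hamming(messages[i], messages[j]) for i, j in pairs]
    return pearson(ranks(input_dists), ranks(message_dists))
```

A score near 1 means that images that are far apart in the input space also receive very different messages, while a score near 0 means that message distances carry little information about input distances.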

The topographic similarity score of MLB is 0.049, which is much lower than the baseline's 0.119. MCT obtains a score of 0.176, which is 0.057 higher than the baseline.

| Pressure | Same-class probability | Signaling game accuracy | Topographic similarity |
| --- | --- | --- | --- |
| baseline | 0.5 | 0.955 | 0.119 |
| MLB | 0.5 | 0.923 | 0.049 |
| MCT | 0.5 | 0.918 | 0.176 |

Conclusions

In this work, we proposed an extension of the standard signaling game setup used to study language emergence. We saw that applying visual pressures leads to a signaling game accuracy competitive with the baseline, which means that the agents still achieve high communication success. The image classification accuracies are high for MLB and substantially higher than random for MCT, which means the additional tasks are learned and impact the model's behaviour.

First, since the topographic similarity score for MLB is much lower than that of the baseline and of the other visual pressure, we conclude that MLB is not an effective pressure to apply for our purposes. Secondly, we see that the MCT pressure obtains a noise accuracy that is close to random. MCT also has a substantially higher topographic similarity score than the baseline and MLB. This gives strong evidence that MCT successfully pressures the model to include high-level features in the messages.