There are many arguments showing that time is a fascinating concept. One of them is the general theory of relativity, but if you are like me and don’t like physics, here is a simpler example: for NLP geeks, early 2019 feels like it happened centuries ago, but for everyone else it was only last year.

Anyway, at that time BERT was still relatively new, and I had to pick an NLP project to work on for my bachelor’s course. After some research, I decided to look into sarcasm classification.

Why sarcasm classification?

Sentiment analysis is a very common task in NLP. Contemporary models obtain brilliant results on it across various datasets collected from the internet, such as IMDB movie reviews and Yelp reviews. However, people often use sarcasm, especially in online text, and sarcasm by definition flips the polarity of the text: a review saying “Great, the battery died after two hours” reads as positive word by word but is clearly negative.

Sarcasm is a very subtle thing even for humans. How many times have you been in that awkward situation at a party when you weren’t sure whether someone was being sarcastic or not? And that isn’t even the worst case: when speaking, you can at least signal your intentions through tone of voice and the like. The real difficulty starts with sarcasm in text messages. Sometimes only knowing the person lets you detect sarcasm correctly. Okay, so we can all agree that sarcasm can be hard to catch even between humans… now imagine asking a machine to do it.

To sum up, sarcasm detection is both important and difficult, which is why I decided to give it a go.

Data and modelling

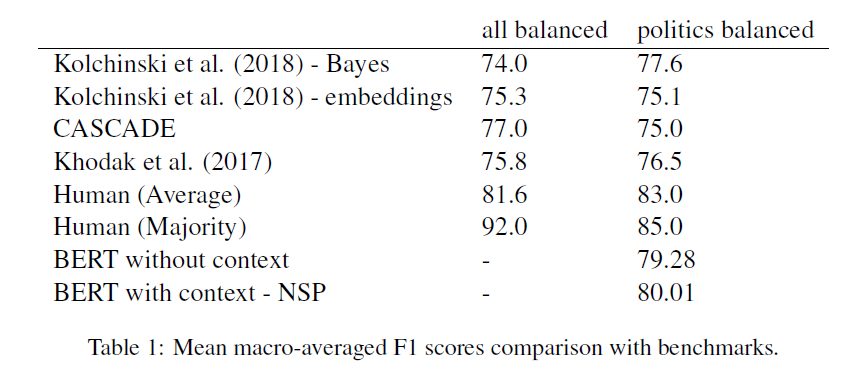

At the time, Hugging Face was only taking its first steps, so I used a notebook from the BERT repository as a template and adapted it to my needs. You can see the results here. I was lucky enough to find a huge dataset built for this specific task, called SARC (the Self-Annotated Reddit Corpus). After finding two papers that evaluated on it, I gave BERT a try and managed to outperform both of them, as you can see in the table below.

Details

For more details, have a look at my report (it’s only three pages long).